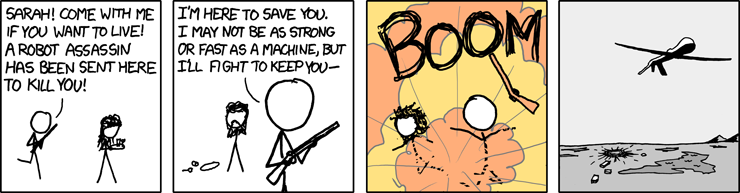

It's Happening: Drones Will Soon Be Able to Decide Who to Kill

Whereas current military drones are still controlled by people, this new technology will decide who to kill with almost no human involvement.

Once complete, these drones will represent the ultimate militarisation of AI and trigger vast legal and ethical implications for wider society.

No kidding. Science fiction is about to become science fact. Can you imagine the implications of this new technology?